Intel Launches Xeon 6900P, 128 P-Core Granite Rapids Arrives To Battle AMD EPYC

Intel Xeon 6 6900P Launch: All P-Core Xeons Arrive For HPC, Big Data And AI Data Centers

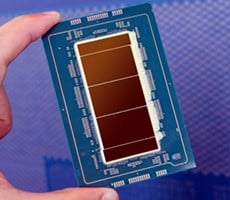

Intel Xeon 6 6900P Series Processors: Intel is launching its most powerful Xeon processors yet with the Xeon 6 6900P Series, featuring up to 128 P-cores and a massive 504MB of L3 cache for HPC and AI data center applications.

Intel announced its Xeon 6 series of processors a few months back, which features two distinct product families – one powered by high-efficiency E-cores and the other by high-performance P-cores. Xeon 6 E-core based processors are optimized for high-density, scale out workloads, while the P-core based Xeon 6 processors are optimized for all-out per-core performance, for compute intensive workloads like big data analytics, HPC, and AI.

The Xeon 6 6700E series, code named Sierra Forest, launched back in June with up to 144 E-cores available per socket, targeting micro-services, networking and media applications. Today, the Xeon 6 6900P series arrives, featuring up to 128 high-performance P-cores per socket. Additional models in the 6900E, 6700P, 6500P and 6300P series, targeting various use cases, are also in the works and due to arrive early next year.

The Intel Xeon 6 series leverages two different microarchitectures, and each microarchitecture excels in different ways. The E’s and P’s in the product names obviously denote E-cores and P-cores, respectively. This is an important distinction because the two chip families are suited for very different applications.

Intel is taking a multi-architecture approach with the Xeon 6 series in an attempt to better address ever-evolving market needs in the age of AI. Though they use disparate architectures, both families of processors use a common platform foundation and software stack. There will be two sockets at play with Intel's Xeon 6 offering, however, and depending on which core technology is being employed, different features and accelerators will be available for customers to take advantage of.

Under The Hood Of Intel's Xeon 6 6900P Series With P-Cores

There will be some overlap with the Xeon 6 6700 and 6900P series processors, but Intel is targeting them for very different workload requirements, in an effort to provide more optimized, tailored solutions for its customers. As such, it is important to understand how the Efficient E-cores and Performance P-cores differ and what features and capabilities may be present (or absent) in a particular Xeon 6 processor.

For a deeper explanation of Sierra Forest and E-core powered Xeon 6 products, we suggest checking out our coverage from their initial launch in June; we’re not going to re-hash those details here. Today’s announcements are all about Intel's Xeon 6 6900P Series for big-iron servers and AI, though we’ve got some Gaudi 3 AI accelerator-related updates to share as well.

As mentioned previously, the Xeon 6 6900P series features an all-P-core design with up to 128 cores per socket. The P-cores used on the Xeon 6 series are based on the Redwood Cove microarchitecture, similar to what’s used in Meteor Lake. Each core has its own 2MB of L2 cache, along with 64KB of instruction cache and 48KB of data cache. The P-cores are wide, with 8-wide decode, 6-way allocate, and 8-wide instruction retire.

Redwood Cove also features a deeper 512-instruction out-of-order execution engine and support for additional data types. The cores are capable of 1024 BF16/FP16 floating-point operations and 2048 INT8 operations per cycle. Redwood Cove P-cores also feature AES-256 and 2048-bit encryption key support, in addition to AVX-512 and Intel AMX.

In addition to standard DDR5, P-core based Xeon 6 processors also support MCR DIMMs, or Multiplexer Combined Ranks Dual In-line Memory Module. MCR DIMMs feature multiple DRAMs bonded to a PCB, where two ranks can operate simultaneously, for higher effective transfer rates of up to 8800MT/s, which results in a significant boost to available bandwidth.

The Xeon 6 6900P series will offer a couple of clustering modes for its memory as well. The memory connected to 6900P processors can be configured in a new flat HEX mode or in SNC3 (Sub-NUMA Clustering) mode. HEX mode is akin to SNC1, in that the memory is configured as a single, large pool. It is ideal for in-memory databases and similar capacity-sensitive applications, and performance should remain consistent for workloads that want maximum memory capacity and aren’t latency sensitive. Because all cores are linked in a fully connected mesh, however, traffic in HEX mode may have to cross compute dies, which adds latency. For latency-sensitive workloads, SNC3 is recommended.

Xeon 6 series processors also feature support for CXL 2.0, whereas the previous, 5th generation Xeon processors supported CXL 1.1. CXL 2.0 is compatible with all of the same devices, and adds managed hot plug support, link bifurcation for multiple devices per port, and enhanced memory interleaving and QoS for higher effective bandwidth. The 6900 series cranks almost everything up a few notches versus the 6700E and previous-gen Xeons.

Intel Xeon 6 P-Core Processor Configurations

6900P series Xeon 6 processors will only support 1 or 2 socket configurations, and they feature 12 memory channels at up to 6400MT/s with DDR5 memory or up to 8800MT/s with MCR DIMMs. The processors also support up to 96 PCIe 5.0 lanes and 6 UPI 2.0 links. Power per CPU also increases, up to 500W.

Intel is able to offer such a wide array of Xeon 6 configurations with varying core counts and features due to the inherent modularity of its advanced packaging technologies, including EMIB, module-die fabric, and a tile-based, multi-die architecture.
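To put the channel count and transfer rates quoted above into perspective, here is a rough, back-of-the-envelope sketch of theoretical peak memory bandwidth per socket. It assumes a 64-bit (8-byte) data path per DDR5 channel, which is not stated in Intel's material, and the function name is purely illustrative. Real-world sustained bandwidth will be lower.

```python
# Rough peak memory bandwidth per socket for the Xeon 6 6900P.
# Assumption (not from Intel's launch material): each DDR5 channel
# carries 64 bits (8 bytes) of data per transfer, ECC bits excluded.
BYTES_PER_TRANSFER = 8


def peak_bandwidth_gbs(channels: int, mega_transfers_per_s: int) -> float:
    """Theoretical peak bandwidth in GB/s (decimal gigabytes)."""
    return channels * mega_transfers_per_s * BYTES_PER_TRANSFER / 1000


ddr5 = peak_bandwidth_gbs(12, 6400)  # 12 channels of DDR5-6400
mcr = peak_bandwidth_gbs(12, 8800)   # 12 channels of MCR DIMMs at 8800MT/s

print(f"DDR5-6400: {ddr5:.1f} GB/s")
print(f"MCR-8800:  {mcr:.1f} GB/s")
print(f"MCR uplift: {mcr / ddr5:.3f}x")
```

Under those assumptions, the figures work out to roughly 614 GB/s with standard DDR5 and roughly 845 GB/s with MCR DIMMs, or about a 37.5% increase in theoretical peak bandwidth.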

Xeon 6 processors are built using modular compute and IO dies, linked using a high-speed fabric that traverses all of the dies in the package. The compute dies, whether featuring E-cores or P-cores, are manufactured using the Intel 3 process, while the IO dies are manufactured on Intel 7. As their names suggest, the compute die is where all of the cores reside, and the IO die features the UPI, PCIe and CXL connectivity, in addition to the Intel Accelerator engines.

How Intel mixes and matches the compute and IO dies determines which series of Xeon 6 processor is being built. For example, the Sierra Forest Xeon 6 6700E series comprises a pair of IO dies and a single compute die, featuring up to 144 E-cores. The Xeon 6 6900P series also features a pair of IO dies, but with three compute dies featuring up to 128 P-cores.

Like the last couple of generations of Xeon processors, the Xeon 6 series also features an array of accelerators to speed up particular workloads, and to complement and offload the CPU cores. The full assortment of accelerators Intel has offered includes:

- Intel Advanced Matrix Extensions (Intel AMX) – P-core models

- Intel Advanced Vector Extensions 512 (Intel AVX-512) – P-core models

- Intel Data Streaming Accelerator (Intel DSA)

- Intel In-Memory Analytics Accelerator (Intel IAA)

- Intel Dynamic Load Balancer (Intel DLB)

- Intel Advanced Vector Extensions (Intel AVX) for vRAN

- Intel QuickAssist Technology (Intel QAT)

- Intel Crypto Acceleration

Intel Xeon 6 6900P Series Model Line-Up

As mentioned earlier, Intel will be offering multiple families of Xeon 6 processors, with various configurations. The Sierra Forest-based 6700E series arrived a couple months back, the Granite Rapids 6900P which uses all “performance” or P-cores is officially launching today, and additional E- and P-core based models will be coming later in the year and in early 2025.

Today’s launch comprises five new models, ranging from 72 to 128 cores with varying base and boost clocks, and TDPs ranging from 400 to 500 watts. Yes, those TDPs are relatively high, but they are in line with expectations for AMD’s upcoming Turin-based EPYC processors. The Xeon 6 6900P series also features up to a massive 504MB of L3 cache, and all models offer a similar number of UPI links, PCIe lanes, etc.

Intel Announces Gaudi 3 AI Accelerator General Availability

Alongside the new Xeon 6 6900P, Intel is also announcing general availability of its Gaudi 3 AI accelerator, which competes against NVIDIA and AMD’s GPU-based AI offerings, and is meant to be paired with these latest high-performance Xeon 6 processors.

Intel’s Gaudi 3 AI accelerator is similar to Gaudi 2, but the architecture and platform are enhanced in a number of ways and optimized for large-scale generative AI. First, Gaudi 3 is manufactured on a newer, more advanced process node (5nm vs. 7nm), which improves power efficiency and allowed Intel to cram more transistors, and hence more features, into the chip, or more accurately, into the chiplets/tiles that comprise a Gaudi 3.

The features of Gaudi 3 include 64 Tensor processor cores (TPCs) and eight matrix multiplication engines (MMEs) to accelerate deep neural network computations. There is also 96MB of integrated SRAM cache, with a massive 12.8TB/s of bandwidth on tap, and 128GB of HBM2e memory with 3.7TB/s of peak bandwidth. 24 RoCE compliant 200Gb Ethernet ports are also part of the design, for flexible on-chip networking, without the need to use any proprietary interfaces or switches. The large 96MB SRAM cache and ability to handle large 256x256 matrixes are two aspects of Gaudi 3’s design that allow it to excel with large models like LLaMA80B and Falcon180B.

Gaudi 3 will be offered in an array of form factors, including Mezzanine cards and PCIe add-in cards. Systems can also be built around an x8 universal base board, and be air or liquid cooled. The variety of form factors and configurations, along with built-in industry-standard Ethernet networking and Intel’s open software tools, allows partners to scale systems to their specific needs, from 1 node all the way up to 1,024-node clusters, which can feature up to 8,192 accelerators and achieve exascale levels of compute capability.
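As a quick sanity check on those scale-out numbers, the sketch below works out accelerators per node and aggregate HBM capacity for the largest cluster mentioned. It assumes every accelerator in the cluster carries the full 128GB of HBM2e quoted earlier; the variable names are ours and purely illustrative.

```python
# Sanity check on the largest Gaudi 3 cluster size quoted by Intel.
# Assumption: every accelerator carries the full 128GB of HBM2e.
NODES = 1024
ACCELERATORS = 8192
HBM_PER_ACCELERATOR_GB = 128

accelerators_per_node = ACCELERATORS // NODES
total_hbm_gb = ACCELERATORS * HBM_PER_ACCELERATOR_GB

print(f"Accelerators per node: {accelerators_per_node}")
print(f"Aggregate HBM: {total_hbm_gb:,} GB (~{total_hbm_gb / 1_000_000:.2f} PB)")
```

The eight accelerators per node line up with the x8 universal base board, and the aggregate HBM capacity lands at a little over a petabyte across a fully built-out cluster.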

We do have some additional details on the PCIe add-in card. The Gaudi 3 PCIe CEM has a similar memory capacity to other form factors, and offers up to 1835 TFLOPS (FP8) of compute performance, with 8 MMEs and 22 x 200GbE RDMA NICs. The card is a dual-slot, full-height design that’s 10.5” long and has a 600W TDP.

Intel also provided an array of performance claims for Gaudi 3 relative to NVIDIA’s Hopper-based GPUs, as it relates to AI training, inference and efficiency. According to Intel, Gaudi 3 delivers an average 50% improvement in inferencing performance with an approximate 40% improvement in power efficiency versus NVIDIA’s H100, but at a fraction of the cost.

Versus the NVIDIA H100, Intel expects Gaudi 3 to deliver approximately 1.19x better inferencing throughput with the LLaMA 2 70B parameter model, at roughly 2x the performance per dollar. And with the smaller, but newer LLaMA 3 8B parameter model, Intel claims Gaudi 3 will offer 1.09x better inference throughput versus H100, at about 1.8x performance per dollar.
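Intel did not share pricing in these claims, but the throughput and performance-per-dollar ratios above imply a relative cost. The sketch below is our own inference, not an Intel figure: it assumes performance per dollar is simply throughput divided by price, so the implied price ratio is the throughput ratio divided by the performance-per-dollar ratio. The function name is hypothetical.

```python
# Implied Gaudi 3 cost relative to H100, inferred from Intel's claims.
# Assumption: perf-per-dollar = throughput / price, so
#   price_ratio = throughput_ratio / perf_per_dollar_ratio
def implied_price_ratio(throughput_ratio: float, perf_per_dollar_ratio: float) -> float:
    """Relative price of Gaudi 3 vs. H100 implied by the two ratios."""
    return throughput_ratio / perf_per_dollar_ratio


llama2_70b = implied_price_ratio(1.19, 2.0)  # LLaMA 2 70B claim
llama3_8b = implied_price_ratio(1.09, 1.8)   # LLaMA 3 8B claim

print(f"LLaMA 2 70B: Gaudi 3 at ~{llama2_70b:.0%} of H100 cost")
print(f"LLaMA 3 8B:  Gaudi 3 at ~{llama3_8b:.0%} of H100 cost")
```

Both claims point to Gaudi 3 coming in at roughly 60% of the cost of an H100 in Intel's comparison, though the exact basis for that cost (accelerator, system, or total cost of ownership) isn't specified here.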